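For anyone new to Kubernetes (k8s for short), the first hurdle is getting it installed, so I (Xianchao) wrote this article to record the whole installation process. If you are a complete beginner, following the steps one by one should get you to a working cluster. Let's begin. There is a lot of material here, all of it practical, with no gimmicks.

If it starts to feel tiring, remember: there are no shortcuts to where we want to go. Only by staying grounded and taking one step at a time do we get somewhere worthwhile.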

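Notes:

1. The yaml files used below are on GitHub:

https://github.com/luckylucky421/kubernetes1.17.3/tree/master

Feel free to fork my repository, and if you find it useful, please give it a star.

2. How to get the images used below: they are on Baidu Netdisk. The images are large, so the download may be slow; for a faster way to get them, see the end of this article.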

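Link: https://pan.baidu.com/s/1UCldCLnIrDpE5NIoMqvXIg Extraction code: xk3y

k8s-master (192.168.124.16):

Operating system: CentOS 7.4, 7.5, 7.6, or later

Hardware: 4 CPU cores, 8 GB RAM, two 60 GB disks

Network: bridged

k8s-node (192.168.124.26):

Operating system: CentOS 7.6

Hardware: 4 CPU cores, 4 GB RAM, two 60 GB disks

Network: bridged

Give each virtual or physical machine a static IP address, so that the address does not change when the machine reboots.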

On k8s-master, edit /etc/sysconfig/network-scripts/ifcfg-ens33 so that it reads:

    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    IPADDR=192.168.124.16
    NETMASK=255.255.255.0
    GATEWAY=192.168.124.1
    DNS1=192.168.124.1
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    IPV6_ADDR_GEN_MODE=stable-privacy
    NAME=ens33
    DEVICE=ens33
    ONBOOT=yes

After changing the file, restart the network service for the change to take effect:

    service network restart

What the ifcfg-ens33 settings mean:

    IPADDR=192.168.124.16    # IP address; must be in the same subnet as your own computer
    NETMASK=255.255.255.0    # subnet mask; must match your own computer's network
    GATEWAY=192.168.124.1    # gateway; on your own computer, open cmd and run ipconfig /all to see it
    DNS1=192.168.124.1       # DNS server; on your own computer, open cmd and run ipconfig /all to see it

On k8s-node, edit /etc/sysconfig/network-scripts/ifcfg-ens33 so that it reads:

    TYPE=Ethernet
    PROXY_METHOD=none
    BROWSER_ONLY=no
    BOOTPROTO=static
    IPADDR=192.168.124.26
    NETMASK=255.255.255.0
    GATEWAY=192.168.124.1
    DNS1=192.168.124.1
    DEFROUTE=yes
    IPV4_FAILURE_FATAL=no
    IPV6INIT=yes
    IPV6_AUTOCONF=yes
    IPV6_DEFROUTE=yes
    IPV6_FAILURE_FATAL=no
    IPV6_ADDR_GEN_MODE=stable-privacy
    NAME=ens33
    DEVICE=ens33
    ONBOOT=yes

After changing the file, restart the network service for the change to take effect:

    service network restart
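Since the two ifcfg-ens33 files above differ only in IPADDR, the file can also be generated. The following is a minimal sketch, not the author's method: render_ifcfg is a hypothetical helper name, it keeps only the IPv4 keys from the files above (dropping the IPv6 keys is an assumption), and it reuses the gateway address as DNS1 as the article does.

```shell
# render_ifcfg is a hypothetical helper, not part of the original article.
# Usage: render_ifcfg <ip> <gateway> [device]
# It only prints the file content; nothing is written unless you redirect it.
render_ifcfg() {
  ip="$1"
  gateway="$2"
  dev="${3:-ens33}"   # defaults to the ens33 interface used in the article
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
IPADDR=$ip
NETMASK=255.255.255.0
GATEWAY=$gateway
DNS1=$gateway
DEFROUTE=yes
NAME=$dev
DEVICE=$dev
ONBOOT=yes
EOF
}

# Example (as root, on k8s-node):
#   render_ifcfg 192.168.124.26 192.168.124.1 > /etc/sysconfig/network-scripts/ifcfg-ens33
#   service network restart
```

Printing to standard output first makes it easy to review the result before overwriting the real file.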

yum -y install wget net-tools nfs-utils lrzsz gcc gcc-c++ make cmake libxml2-devel openssl-devel curl curl-devel unzip sudo ntp libaio-devel wget vim ncurses-devel autoconf automake zlib-devel python-devel epel-release lrzsz openssh-server socat ipvsadm conntrack

systemctl stop firewalld && systemctl disable firewalld

yum install iptables-services -y

service iptables stop && systemctl disable iptables

ntpdate cn.pool.ntp.org

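Run crontab -e and add this entry:

    * */1 * * * /usr/sbin/ntpdate cn.pool.ntp.org

Because the minute field is a wildcard, this entry runs ntpdate every minute; to sync once an hour, set the minute field to 0.

Disable SELinux permanently, so that it stays disabled after the machine reboots.

Edit /etc/sysconfig/selinux:

    sed -i 's/SELINUX=enforcing/SELINUX=disabled/' /etc/sysconfig/selinux
    sed -i "s/SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

After changing these files, the machine must be rebooted; a forced reboot works: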

reboot -f
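The SELinux edit above can be wrapped in a small function so that it can be tried on a copy of the config file first. This is only a sketch: disable_selinux_in is a hypothetical name, and the function rewrites the file through a temporary copy instead of sed -i, which is a choice made here for portability rather than something the article prescribes.

```shell
# disable_selinux_in is a hypothetical helper, not part of the original article.
# Usage: disable_selinux_in [config-file]   (defaults to /etc/selinux/config)
disable_selinux_in() {
  cfg="${1:-/etc/selinux/config}"
  tmp="$(mktemp)" || return 1
  # Only the exact line SELINUX=enforcing is changed; SELINUXTYPE= is left alone.
  sed 's/^SELINUX=enforcing$/SELINUX=disabled/' "$cfg" > "$tmp" && cat "$tmp" > "$cfg"
  rm -f "$tmp"
  # Print the resulting setting so the change can be verified at a glance.
  grep '^SELINUX=' "$cfg"
}

# Example (as root):
#   disable_selinux_in /etc/selinux/config
#   getenforce    # after the reboot this should report Disabled
```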

    swapoff -a

To disable swap permanently, open /etc/fstab and comment out the swap line:

    sed -i 's/.*swap.*/#&/' /etc/fstab

    cat <<EOF > /etc/sysctl.d/k8s.conf
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    EOF
    sysctl --system

On k8s-master (192.168.124.16):

    hostnamectl set-hostname k8s-master

On k8s-node (192.168.124.26):

    hostnamectl set-hostname k8s-node
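Going back to the swap step above: the sed command there prefixes every line containing "swap" with #, so running it twice stacks the # characters. A more conservative variant is sketched below under stated assumptions: comment_out_swap is a hypothetical name, and it only touches lines that are not already comments and that contain swap as a whitespace-separated field.

```shell
# comment_out_swap is a hypothetical helper, not part of the original article.
# Usage: comment_out_swap [fstab-file]   (defaults to /etc/fstab)
comment_out_swap() {
  fstab="${1:-/etc/fstab}"
  tmp="$(mktemp)" || return 1
  # Match lines that do not start with '#' and have "swap" as its own field,
  # then put a '#' in front of them. Already commented lines are skipped,
  # so running the function repeatedly is safe.
  sed 's/^\([^#].*[[:space:]]swap[[:space:]].*\)$/#\1/' "$fstab" > "$tmp" \
    && cat "$tmp" > "$fstab"
  rm -f "$tmp"
}

# Example (as root):
#   swapoff -a
#   comment_out_swap /etc/fstab
#
# If sysctl --system reports that the net.bridge.* keys do not exist,
# loading the bridge netfilter module first usually resolves it:
#   modprobe br_netfilter
```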

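Add the following two lines to /etc/hosts:

    192.168.124.16 k8s-master
    192.168.124.26 k8s-node

On k8s-master:

    ssh-keygen -t rsa    # press Enter at every prompt
    ssh-copy-id -i .ssh/id_rsa.pub root@192.168.124.26
    # a password is requested here; enter the root password of the k8s-node machine

(1) Back up the original yum repo file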

mv /etc/yum.repos.d/CentOS-Base.repo /etc/yum.repos.d/CentOS-Base.repo.backup

(2) Download the Aliyun yum repo file

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

(3) Rebuild the yum cache

yum makecache fast

(4) Configure the yum repo needed to install k8s

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo
    [kubernetes]
    name=Kubernetes
    baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    EOF

(5) Clean the yum cache

yum clean all

(6) Rebuild the yum cache

yum makecache fast

(7) Update the installed packages

yum -y update

(8) Install the required packages

yum -y install yum-utils device-mapper-persistent-data lvm2

(9) Add the new package repo

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum list docker-ce --showduplicates |sort -r

    yum install -y docker-ce-19*
    systemctl enable docker && systemctl start docker
    # check the docker status; active (running) means docker is running normally
    systemctl status docker
    cat > /etc/docker/daemon.json <<EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2",
      "storage-opts": [
        "overlay2.override_kernel_check=true"
      ]
    }
    EOF
    systemctl restart docker
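After the restart it is worth confirming that docker really picked up the systemd cgroup driver from daemon.json. The sketch below assumes the usual "Cgroup Driver: ..." line in the docker info output; cgroup_driver is a hypothetical helper name.

```shell
# cgroup_driver is a hypothetical helper, not part of the original article.
# It reads `docker info` output on standard input and prints the cgroup driver.
cgroup_driver() {
  sed -n 's/^[[:space:]]*Cgroup Driver:[[:space:]]*//p'
}

# Example:
#   docker info 2>/dev/null | cgroup_driver      # should print: systemd
#
# docker can also print the field directly:
#   docker info --format '{{.CgroupDriver}}'
```

If the result is cgroupfs instead of systemd, the daemon.json file was probably not read, for example because of a JSON syntax error.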

Install kubeadm and kubelet, enable kubelet, and load the images (the image versions are noted below):

    yum install kubeadm-1.17.3 kubelet-1.17.3
    systemctl enable kubelet
    docker load -i kube-apiserver.tar.gz
    docker load -i kube-scheduler.tar.gz
    docker load -i kube-controller-manager.tar.gz
    docker load -i pause.tar.gz
    docker load -i cordns.tar.gz
    docker load -i etcd.tar.gz
    docker load -i kube-proxy.tar.gz
    docker load -i cni.tar.gz
    docker load -i calico-node.tar.gz
    docker load -i kubernetes-dashboard_1_10.tar.gz
    docker load -i metrics-server-amd64_0_3_1.tar.gz
    docker load -i addon.tar.gz
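The docker load commands above can also be written as a loop over every .tar.gz archive in a directory. This is a minimal sketch, assuming all downloaded archives sit in one directory; load_images and the DOCKER override variable are hypothetical names introduced here for illustration (DOCKER exists only so the loop can be dry-run with echo).

```shell
# load_images is a hypothetical helper, not part of the original article.
# Usage: load_images [directory]   (defaults to the current directory)
# Set DOCKER=echo to print the commands instead of running them.
load_images() {
  dir="${1:-.}"
  for f in "$dir"/*.tar.gz; do
    # If the glob matched nothing, $f is the literal pattern; skip it.
    [ -e "$f" ] || continue
    ${DOCKER:-docker} load -i "$f" || return 1
  done
}

# Example:
#   DOCKER=echo load_images /root/images    # dry run, prints each command
#   load_images /root/images                # actually load the images
#   docker images                           # confirm the images are present
```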

Image versions: pause 3.1; etcd 3.4.3; CoreDNS 1.6.5; cni 3.5.3; calico 3.5.3; apiserver, scheduler, controller-manager and kube-proxy 1.17.3; kubernetes dashboard 1.10.1; metrics-server 0.3.1; addon-resizer 1.8.4.

Initialize the cluster on k8s-master:

    kubeadm init --kubernetes-version=v1.17.3 --pod-network-cidr=10.244.0.0/16 --apiserver-advertise-address 192.168.124.16

Output like the following means the initialization succeeded:

    To start using your cluster, you need to run the following as a regular user:

      mkdir -p $HOME/.kube
      sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
      sudo chown $(id -u):$(id -g) $HOME/.kube/config

    You should now deploy a pod network to the cluster.
    Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
      https://kubernetes.io/docs/concepts/cluster-administration/addons/

    Then you can join any number of worker nodes by running the following on each as root:

    kubeadm join 192.168.124.16:6443 --token i9m8e5.z12dnlekjbmuebsk --discovery-token-ca-cert-hash sha256:2dc931b4508137fbe1bcb93dc84b7332e7e874ec5862a9e8b8fff9f7c2b57621

Take note of the kubeadm join ... command: it has to be run on the node later to add that node to the cluster. The token and hash are different on every run, so record the values from your own output; they are used below.
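Rather than copying the join command by hand, the token and CA hash can be pulled out of saved kubeadm init output. The sketch below assumes the output was saved to a file (for example with tee); join_token and join_ca_hash are hypothetical helper names, and the patterns rely on the standard token shape (6 characters, a dot, 16 characters) and a 64-digit sha256 hash.

```shell
# join_token and join_ca_hash are hypothetical helpers, not part of the
# original article. Both read kubeadm init output on standard input.
join_token() {
  sed -n 's/.*--token \([a-z0-9]\{6\}\.[a-z0-9]\{16\}\).*/\1/p'
}

join_ca_hash() {
  sed -n 's/.*--discovery-token-ca-cert-hash \(sha256:[0-9a-f]\{64\}\).*/\1/p'
}

# Example (kubeadm-init.log is an assumed file name):
#   kubeadm init ... | tee kubeadm-init.log
#   join_token   < kubeadm-init.log
#   join_ca_hash < kubeadm-init.log
#
# If the output was lost or the token has expired, a fresh join command
# can be printed on the master with:
#   kubeadm token create --print-join-command
```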

    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    kubectl get nodes

The output looks like this:

    NAME         STATUS     ROLES    AGE     VERSION
    k8s-master   NotReady   master   2m13s   v1.17.3

On k8s-node, run the join command to add the node to the cluster:

    kubeadm join 192.168.124.16:6443 --token i9m8e5.z12dnlekjbmuebsk --discovery-token-ca-cert-hash sha256:2dc931b4508137fbe1bcb93dc84b7332e7e874ec5862a9e8b8fff9f7c2b57621

This join command is the one that was generated when the cluster was initialized in 3.3.

kubectl get nodes now shows:

    NAME         STATUS     ROLES    AGE     VERSION
    k8s-master   NotReady   master   3m48s   v1.17.3
    k8s-node     NotReady   <none>   6s      v1.17.3

Both nodes have the status NotReady because no network plugin, such as calico or flannel, has been installed yet.
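The same check can be scripted. The sketch below filters kubectl get nodes output and prints the nodes whose STATUS is not exactly Ready; not_ready_nodes is a hypothetical helper name, and it assumes the default column layout shown above.

```shell
# not_ready_nodes is a hypothetical helper, not part of the original article.
# It reads `kubectl get nodes` output on standard input and prints the name
# of every node whose STATUS column is anything other than "Ready".
not_ready_nodes() {
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Example:
#   kubectl get nodes | not_ready_nodes
#
# kubectl can also block until the nodes become Ready:
#   kubectl wait --for=condition=Ready nodes --all --timeout=300s
```

An empty result means every node is Ready, which is the expected state once the network plugin is running.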

Run the following on the master node:

    kubectl apply -f calico.yaml

    cat calico.yaml

    # Calico Version v3.5.3
    # https://docs.projectcalico.org/v3.5/releases#v3.5.3
    # This manifest includes the following component versions:
    #   calico/node:v3.5.3
    #   calico/cni:v3.5.3

    # This ConfigMap is used to configure a self-hosted Calico installation.
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: calico-config
      namespace: kube-system
    data:
      # Typha is disabled.
      typha_service_name: "none"
      # Configure the Calico backend to use.
      calico_backend: "bird"
      # Configure the MTU to use
      veth_mtu: "1440"
      # The CNI network configuration to install on each node. The special
      # values in this config will be automatically populated.
      cni_network_config: |-
        {
          "name": "k8s-pod-network",
          "cniVersion": "0.3.0",
          "plugins": [
            {
              "type": "calico",
              "log_level": "info",
              "datastore_type": "kubernetes",
              "nodename": "__KUBERNETES_NODE_NAME__",
              "mtu": __CNI_MTU__,
              "ipam": {
                "type": "host-local",
                "subnet": "usePodCidr"
              },
              "policy": {
                "type": "k8s"
              },
              "kubernetes": {
                "kubeconfig": "__KUBECONFIG_FILEPATH__"
              }
            },
            {
              "type": "portmap",
              "snat": true,
              "capabilities": {"portMappings": true}
            }
          ]
        }
    ---
    # This manifest installs the calico/node container, as well
    # as the Calico CNI plugins and network config on
    # each master and worker node in a Kubernetes cluster.
    kind: DaemonSet
    apiVersion: apps/v1
    metadata:
      name: calico-node
      namespace: kube-system
      labels:
        k8s-app: calico-node
    spec:
      selector:
        matchLabels:
          k8s-app: calico-node
      updateStrategy:
        type: RollingUpdate
        rollingUpdate:
          maxUnavailable: 1
      template:
        metadata:
          labels:
            k8s-app: calico-node
          annotations:
            # This, along with the CriticalAddonsOnly toleration below,
            # marks the pod as a critical add-on, ensuring it gets
            # priority scheduling and that its resources are reserved
            # if it ever gets evicted.
            scheduler.alpha.kubernetes.io/critical-pod: ''
        spec:
          nodeSelector:
            beta.kubernetes.io/os: linux
          hostNetwork: true
          tolerations:
            # Make sure calico-node gets scheduled on all nodes.
            - effect: NoSchedule
              operator: Exists
            # Mark the pod as a critical add-on for rescheduling.
            - key: CriticalAddonsOnly
              operator: Exists
            - effect: NoExecute
              operator: Exists
          serviceAccountName: calico-node
          # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
          # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
          terminationGracePeriodSeconds: 0
          initContainers:
            # This container installs the Calico CNI binaries
            # and CNI network config file on each node.
            - name: install-cni
              image: quay.io/calico/cni:v3.5.3
              command: ["/install-cni.sh"]
              env:
                # Name of the CNI config file to create.
                - name: CNI_CONF_NAME
                  value: "10-calico.conflist"
                # The CNI network config to install on each node.
                - name: CNI_NETWORK_CONFIG
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: cni_network_config
                # Set the hostname based on the k8s node name.
                - name: KUBERNETES_NODE_NAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                # CNI MTU Config variable
                - name: CNI_MTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # Prevents the container from sleeping forever.
                - name: SLEEP
                  value: "false"
              volumeMounts:
                - mountPath: /host/opt/cni/bin
                  name: cni-bin-dir
                - mountPath: /host/etc/cni/net.d
                  name: cni-net-dir
          containers:
            # Runs calico/node container on each Kubernetes node. This
            # container programs network policy and routes on each
            # host.
            - name: calico-node
              image: quay.io/calico/node:v3.5.3
              env:
                # Use Kubernetes API as the backing datastore.
                - name: DATASTORE_TYPE
                  value: "kubernetes"
                # Wait for the datastore.
                - name: WAIT_FOR_DATASTORE
                  value: "true"
                # Set based on the k8s node name.
                - name: NODENAME
                  valueFrom:
                    fieldRef:
                      fieldPath: spec.nodeName
                # Choose the backend to use.
                - name: CALICO_NETWORKING_BACKEND
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: calico_backend
                # Cluster type to identify the deployment type
                - name: CLUSTER_TYPE
                  value: "k8s,bgp"
                # Auto-detect the BGP IP address.
                - name: IP
                  value: "autodetect"
                - name: IP_AUTODETECTION_METHOD
                  value: "can-reach=192.168.124.56"
                # Enable IPIP
                - name: CALICO_IPV4POOL_IPIP
                  value: "Always"
                # Set MTU for tunnel device used if ipip is enabled
                - name: FELIX_IPINIPMTU
                  valueFrom:
                    configMapKeyRef:
                      name: calico-config
                      key: veth_mtu
                # The default IPv4 pool to create on startup if none exists. Pod IPs will be
                # chosen from this range. Changing this value after installation will have
                # no effect. This should fall within `--cluster-cidr`.
                - name: CALICO_IPV4POOL_CIDR
                  value: "10.244.0.0/16"
                # Disable file logging so `kubectl logs` works.
                - name: CALICO_DISABLE_FILE_LOGGING
                  value: "true"
                # Set Felix endpoint to host default action to ACCEPT.
                - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
                  value: "ACCEPT"
                # Disable IPv6 on Kubernetes.
                - name: FELIX_IPV6SUPPORT
                  value: "false"
                # Set Felix logging to "info"
                - name: FELIX_LOGSEVERITYSCREEN
                  value: "info"
                - name: FELIX_HEALTHENABLED
                  value: "true"
              securityContext:
                privileged: true
              resources:
                requests:
                  cpu: 250m
              livenessProbe:
                httpGet:
                  path: /liveness
                  port: 9099
                  host: localhost
                periodSeconds: 10
                initialDelaySeconds: 10
                failureThreshold: 6
              readinessProbe:
                exec:
                  command:
                    - /bin/calico-node
                    - -bird-ready
                    - -felix-ready
                periodSeconds: 10
              volumeMounts:
                - mountPath: /lib/modules
                  name: lib-modules
                  readOnly: true
                - mountPath: /run/xtables.lock
                  name: xtables-lock
                  readOnly: false
                - mountPath: /var/run/calico
                  name: var-run-calico
                  readOnly: false
                - mountPath: /var/lib/calico
                  name: var-lib-calico
                  readOnly: false
          volumes:
            # Used by calico/node.
            - name: lib-modules
              hostPath:
                path: /lib/modules
            - name: var-run-calico
              hostPath:
                path: /var/run/calico
            - name: var-lib-calico
              hostPath:
                path: /var/lib/calico
            - name: xtables-lock
              hostPath:
                path: /run/xtables.lock
                type: FileOrCreate
            # Used to install CNI.
            - name: cni-bin-dir
              hostPath:
                path: /opt/cni/bin
            - name: cni-net-dir
              hostPath:
                path: /etc/cni/net.d
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: calico-node
      namespace: kube-system
    ---
    # Create all the CustomResourceDefinitions needed for
    # Calico policy and networking mode.
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: felixconfigurations.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: FelixConfiguration
        plural: felixconfigurations
        singular: felixconfiguration
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: bgppeers.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: BGPPeer
        plural: bgppeers
        singular: bgppeer
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: bgpconfigurations.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: BGPConfiguration
        plural: bgpconfigurations
        singular: bgpconfiguration
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: ippools.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: IPPool
        plural: ippools
        singular: ippool
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: hostendpoints.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: HostEndpoint
        plural: hostendpoints
        singular: hostendpoint
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: clusterinformations.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: ClusterInformation
        plural: clusterinformations
        singular: clusterinformation
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: globalnetworkpolicies.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: GlobalNetworkPolicy
        plural: globalnetworkpolicies
        singular: globalnetworkpolicy
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: globalnetworksets.crd.projectcalico.org
    spec:
      scope: Cluster
      group: crd.projectcalico.org
      version: v1
      names:
        kind: GlobalNetworkSet
        plural: globalnetworksets
        singular: globalnetworkset
    ---
    apiVersion: apiextensions.k8s.io/v1beta1
    kind: CustomResourceDefinition
    metadata:
      name: networkpolicies.crd.projectcalico.org
    spec:
      scope: Namespaced
      group: crd.projectcalico.org
      version: v1
      names:
        kind: NetworkPolicy
        plural: networkpolicies
        singular: networkpolicy
    ---
    # Include a clusterrole for the calico-node DaemonSet,
    # and bind it to the calico-node serviceaccount.
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: calico-node
    rules:
      # The CNI plugin needs to get pods, nodes, and namespaces.
      - apiGroups: [""]
        resources:
          - pods
          - nodes
          - namespaces
        verbs:
          - get
      - apiGroups: [""]
        resources:
          - endpoints
          - services
        verbs:
          # Used to discover service IPs for advertisement.
          - watch
          - list
          # Used to discover Typhas.
          - get
      - apiGroups: [""]
        resources:
          - nodes/status
        verbs:
          # Needed for clearing NodeNetworkUnavailable flag.
          - patch
          # Calico stores some configuration information in node annotations.
          - update
      # Watch for changes to Kubernetes NetworkPolicies.
      - apiGroups: ["networking.k8s.io"]
        resources:
          - networkpolicies
        verbs:
          - watch
          - list
      # Used by Calico for policy information.
      - apiGroups: [""]
        resources:
          - pods
          - namespaces
          - serviceaccounts
        verbs:
          - list
          - watch
      # The CNI plugin patches pods/status.
      - apiGroups: [""]
        resources:
          - pods/status
        verbs:
          - patch
      # Calico monitors various CRDs for config.
      - apiGroups: ["crd.projectcalico.org"]
        resources:
          - globalfelixconfigs
          - felixconfigurations
          - bgppeers
          - globalbgpconfigs
          - bgpconfigurations
          - ippools
          - globalnetworkpolicies
          - globalnetworksets
          - networkpolicies
          - clusterinformations
          - hostendpoints
        verbs:
          - get
          - list
          - watch
      # Calico must create and update some CRDs on startup.
      - apiGroups: ["crd.projectcalico.org"]
        resources:
          - ippools
          - felixconfigurations
          - clusterinformations
        verbs:
          - create
          - update
      # Calico stores some configuration information on the node.
      - apiGroups: [""]
        resources:
          - nodes
        verbs:
          - get
          - list
          - watch
      # These permissions are only required for upgrade from v2.6, and can
      # be removed after upgrade or on fresh installations.
      - apiGroups: ["crd.projectcalico.org"]
        resources:
          - bgpconfigurations
          - bgppeers
        verbs:
          - create
          - update
    ---
    apiVersion: rbac.authorization.k8s.io/v1beta1
    kind: ClusterRoleBinding
    metadata:
      name: calico-node
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: calico-node
    subjects:
    - kind: ServiceAccount
      name: calico-node
      namespace: kube-system
    ---

On the k8s-master node, check whether calico is in the Running state:

kubectl get pods -n kube-system

Output like the following means calico was deployed correctly. If calico is not deployed successfully, CoreDNS stays in ContainerCreating.

    calico-node-rkklw          1/1   Running   0   3m4s
    calico-node-rnzfq          1/1   Running   0   3m4s
    coredns-6955765f44-jzm4k   1/1   Running   0   25m
    coredns-6955765f44-mmbr7   1/1   Running   0   25m

On the k8s-master node, check the node STATUS:

    kubectl get nodes

The output is as follows; a STATUS of Ready means the cluster is healthy:

    NAME         STATUS   ROLES    AGE     VERSION
    k8s-master   Ready    master   9m52s   v1.17.3
    k8s-node     Ready    <none>   6m10s   v1.17.3

On the k8s-master node, run:

    kubectl apply -f kubernetes-dashboard.yaml

    cat kubernetes-dashboard.yaml

    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    #     http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # Configuration to deploy release version of the Dashboard UI compatible with
    # Kubernetes 1.8.
    #
    # Example usage: kubectl create -f <this_file>
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        # Allows editing resource and makes sure it is created first.
        addonmanager.kubernetes.io/mode: EnsureExists
      name: kubernetes-dashboard-certs
      namespace: kube-system
    type: Opaque
    ---
    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        # Allows editing resource and makes sure it is created first.
        addonmanager.kubernetes.io/mode: EnsureExists
      name: kubernetes-dashboard-key-holder
      namespace: kube-system
    type: Opaque
    ---
    # ------------------- Dashboard Service Account ------------------- #
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-admin
      namespace: kube-system
    ---
    # ------------------- Dashboard Role & Role Binding ------------------- #
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: kubernetes-dashboard-admin
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard-admin
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: cluster-watcher
    rules:
    - apiGroups:
      - '*'
      resources:
      - '*'
      verbs:
      - 'get'
      - 'list'
    - nonResourceURLs:
      - '*'
      verbs:
      - 'get'
      - 'list'
    - apiGroups: [""]
      resources: ["secrets"]
      resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
      verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
    - apiGroups: [""]
      resources: ["configmaps"]
      resourceNames: ["kubernetes-dashboard-settings"]
      verbs: ["get", "update"]
      # Allow Dashboard to get metrics from heapster.
    - apiGroups: [""]
      resources: ["services"]
      resourceNames: ["heapster"]
      verbs: ["proxy"]
    ---
    # ------------------- Dashboard Deployment ------------------- #
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: kubernetes-dashboard
      namespace: kube-system
      labels:
        k8s-app: kubernetes-dashboard
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          priorityClassName: system-cluster-critical
          containers:
          - name: kubernetes-dashboard
            image: k8s.gcr.io/kubernetes-dashboard-amd64:v1.10.1
            resources:
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 50m
                memory: 100Mi
            ports:
            - containerPort: 8443
              protocol: TCP
            args:
              - --auto-generate-certificates
            volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            - name: tmp-volume
              mountPath: /tmp
            livenessProbe:
              httpGet:
                scheme: HTTPS
                path: /
                port: 8443
              initialDelaySeconds: 30
              timeoutSeconds: 30
          volumes:
          - name: kubernetes-dashboard-certs
            secret:
              secretName: kubernetes-dashboard-certs
          - name: tmp-volume
            emptyDir: {}
          serviceAccountName: kubernetes-dashboard-admin
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
    ---
    # ------------------- Dashboard Service ------------------- #
    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      ports:
        - port: 443
          targetPort: 8443
      selector:
        k8s-app: kubernetes-dashboard
      type: NodePort
    ---
    kind: Ingress
    apiVersion: extensions/v1beta1
    metadata:
      name: dashboard
      namespace: kube-system
      annotations:
        kubernetes.io/ingress.class: traefik
    spec:
      rules:
      - host: dashboard.multi.io
        http:
          paths:
          - backend:
              serviceName: kubernetes-dashboard
              servicePort: 443
            path: /

Check whether the dashboard was installed successfully:

kubectl get pods -n kube-system

Output like the following means the dashboard was installed successfully:

kubernetes-dashboard-7898456f45-8v6pw 1/1 Running 0 61s
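Scanning the pod list by eye gets tedious as more add-ons are installed. The sketch below reads kubectl get pods output and prints every pod that is either not Running or does not have all of its containers ready; pods_not_ready is a hypothetical helper name, and it assumes the default NAME / READY / STATUS column layout with a header line.

```shell
# pods_not_ready is a hypothetical helper, not part of the original article.
# It reads `kubectl get pods` output (with the header line) on standard input.
pods_not_ready() {
  awk 'NR > 1 {
    split($2, r, "/")                       # READY column, e.g. 1/1 or 0/1
    if ($3 != "Running" || r[1] != r[2]) print $1
  }'
}

# Example:
#   kubectl get pods -n kube-system | pods_not_ready
```

No output means every listed pod is Running with all containers ready. Pods of finished jobs (STATUS Completed) would also be listed by this simple filter.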

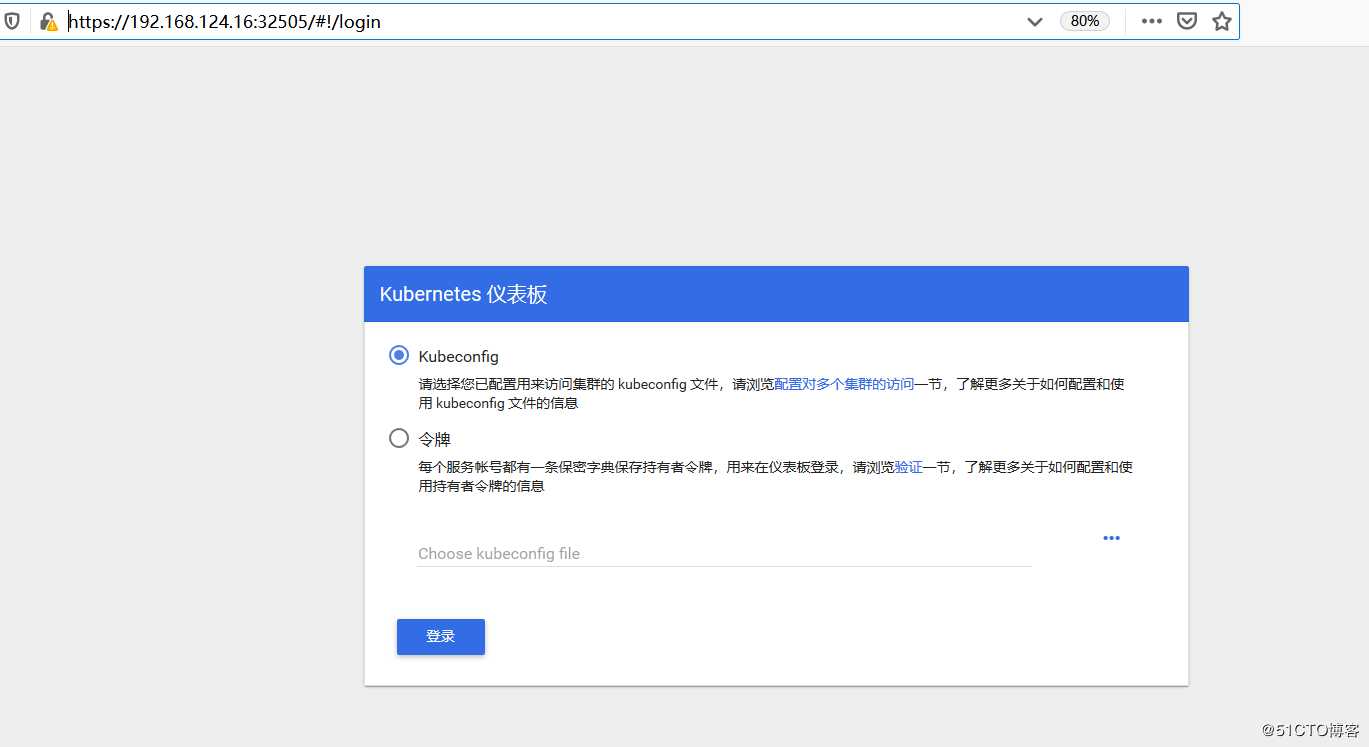

Check the service that fronts the dashboard:

kubectl get svc -n kube-system

kubernetes-dashboard NodePort 10.106.68.182 <none> 443:32505/TCP 12m

As shown above, the service type is NodePort, so the kubernetes dashboard can be reached on port 32505 of the k8s-master node's IP. In my environment the address is:

https://192.168.124.16:32505/
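The NodePort is assigned by the cluster, so it will most likely differ from 32505 in your environment. The sketch below extracts it from kubectl get svc output; node_port is a hypothetical helper name, and it assumes the PORT(S) value is the fifth column in the form port:nodePort/protocol, as in the output above.

```shell
# node_port is a hypothetical helper, not part of the original article.
# Usage: kubectl get svc -n kube-system | node_port <service-name>
node_port() {
  awk -v svc="$1" '$1 == svc {
    split($5, p, "[:/]")    # e.g. 443:32505/TCP -> p[1]=443, p[2]=32505, p[3]=TCP
    print p[2]
  }'
}

# Example:
#   port="$(kubectl get svc -n kube-system | node_port kubernetes-dashboard)"
#   echo "https://192.168.124.16:${port}/"
#
# kubectl can also return the field directly:
#   kubectl get svc kubernetes-dashboard -n kube-system \
#     -o jsonpath='{.spec.ports[0].nodePort}'
```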

5. Install the metrics monitoring plugin

On the k8s-master node, run:

kubectl apply -f metrics.yaml

    cat metrics.yaml

    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: metrics-server:system:auth-delegator
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:auth-delegator
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: metrics-server-auth-reader
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: extension-apiserver-authentication-reader
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: system:metrics-server
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - nodes
      - nodes/stats
      - namespaces
      verbs:
      - get
      - list
      - watch
    - apiGroups:
      - "extensions"
      resources:
      - deployments
      verbs:
      - get
      - list
      - update
      - watch
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: system:metrics-server
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: system:metrics-server
    subjects:
    - kind: ServiceAccount
      name: metrics-server
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: metrics-server-config
      namespace: kube-system
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: EnsureExists
    data:
      NannyConfiguration: |-
        apiVersion: nannyconfig/v1alpha1
        kind: NannyConfiguration
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        k8s-app: metrics-server
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
        version: v0.3.1
    spec:
      selector:
        matchLabels:
          k8s-app: metrics-server
          version: v0.3.1
      template:
        metadata:
          name: metrics-server
          labels:
            k8s-app: metrics-server
            version: v0.3.1
          annotations:
            scheduler.alpha.kubernetes.io/critical-pod: ''
            seccomp.security.alpha.kubernetes.io/pod: 'docker/default'
        spec:
          priorityClassName: system-cluster-critical
          serviceAccountName: metrics-server
          containers:
          - name: metrics-server
            image: k8s.gcr.io/metrics-server-amd64:v0.3.1
            command:
            - /metrics-server
            - --metric-resolution=30s
            - --kubelet-preferred-address-types=InternalIP
            - --kubelet-insecure-tls
            ports:
            - containerPort: 443
              name: https
              protocol: TCP
          - name: metrics-server-nanny
            image: k8s.gcr.io/addon-resizer:1.8.4
            resources:
              limits:
                cpu: 100m
                memory: 300Mi
              requests:
                cpu: 5m
                memory: 50Mi
            env:
              - name: MY_POD_NAME
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.name
              - name: MY_POD_NAMESPACE
                valueFrom:
                  fieldRef:
                    fieldPath: metadata.namespace
            volumeMounts:
            - name: metrics-server-config-volume
              mountPath: /etc/config
            command:
              - /pod_nanny
              - --config-dir=/etc/config
              - --cpu=300m
              - --extra-cpu=20m
              - --memory=200Mi
              - --extra-memory=10Mi
              - --threshold=5
              - --deployment=metrics-server
              - --container=metrics-server
              - --poll-period=300000
              - --estimator=exponential
              - --minClusterSize=2
          volumes:
            - name: metrics-server-config-volume
              configMap:
                name: metrics-server-config
          tolerations:
            - key: "CriticalAddonsOnly"
              operator: "Exists"
            - key: node-role.kubernetes.io/master
              effect: NoSchedule
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: metrics-server
      namespace: kube-system
      labels:
        addonmanager.kubernetes.io/mode: Reconcile
        kubernetes.io/cluster-service: "true"
        kubernetes.io/name: "Metrics-server"
    spec:
      selector:
        k8s-app: metrics-server
      ports:
      - port: 443
        protocol: TCP
        targetPort: https
    ---
    apiVersion: apiregistration.k8s.io/v1beta1
    kind: APIService
    metadata:
      name: v1beta1.metrics.k8s.io
      labels:
        kubernetes.io/cluster-service: "true"
        addonmanager.kubernetes.io/mode: Reconcile
    spec:
      service:
        name: metrics-server
        namespace: kube-system
      group: metrics.k8s.io
      version: v1beta1
      insecureSkipTLSVerify: true
      groupPriorityMinimum: 100
      versionPriority: 100

Once all the components above are installed, run kubectl get pods -n kube-system to check that they are healthy. A STATUS of Running means the component is working, as shown below:

    NAME                                    READY   STATUS    RESTARTS   AGE
    calico-node-rkklw                       1/1     Running   0          4h29m
    calico-node-rnzfq                       1/1     Running   0          4h29m
    coredns-6955765f44-jzm4k                1/1     Running   0          4h52m
    coredns-6955765f44-mmbr7                1/1     Running   0          4h52m
    etcd-k8s-master                         1/1     Running   0          4h52m
    kube-apiserver-k8s-master               1/1     Running   0          4h52m
    kube-controller-manager-k8s-master      1/1     Running   1          4h52m
    kube-proxy-jch6r                        1/1     Running   0          4h52m
    kube-proxy-pgncn                        1/1     Running   0          4h43m
    kube-scheduler-k8s-master               1/1     Running   1          4h52m
    kubernetes-dashboard-7898456f45-8v6pw   1/1     Running   0          177m
    metrics-server-5cf9669fbf-bdl8z         2/2     Running   0          8m19s

A lot of effort went into this article, which runs to roughly ten thousand characters. It covers a lot of ground, and if you follow the steps you should end up with a working k8s cluster. To keep learning k8s, or to get free installation videos for other k8s versions, you can join the technical discussion group as described below.

A closing thought:

If you improve a little every day, then over each month and each year you will have come a long way. Keep going!